Unlocking Local AI: From Ollama to V0.dev

Running AI locally has never been more accessible. In this guide, we'll set up Ollama to run AI models on your own machine, integrate it with VSCode's Continue extension for a smoother development workflow, and then explore V0.dev for rapid frontend development. As a grand finale, we'll use V0.dev to clone a webpage.

Let's Go!

What is Ollama?

Ollama is a lightweight, extensible framework that allows you to run large language models (LLMs) directly on your local machine. By bringing AI capabilities in-house, you gain:

- Data Privacy: Keep sensitive data within your infrastructure.

- Reduced Latency: Immediate responses without relying on external servers.

- Cost Efficiency: Eliminate expenses associated with cloud-based AI services.

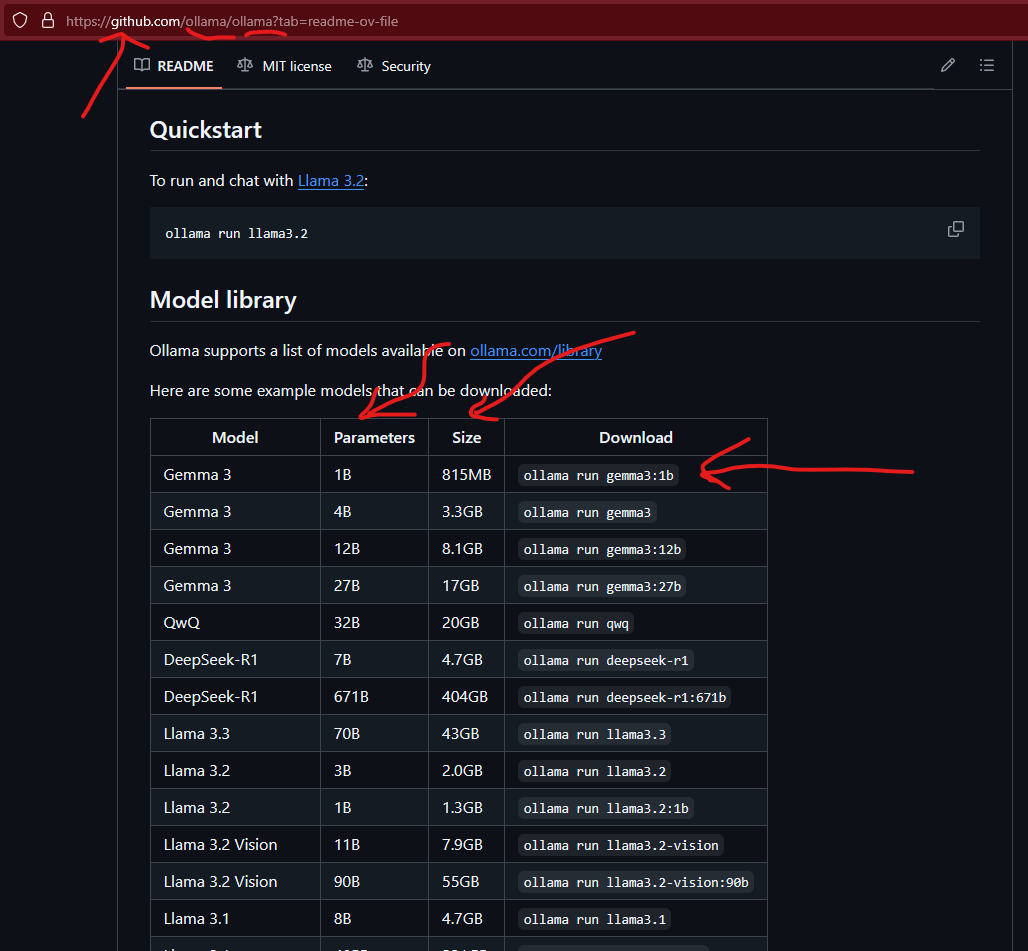

Ollama supports various models, including Llama 3.3, DeepSeek-R1, Phi-4, Mistral, and Gemma 3.

You can explore the full library at ollama.com/library. Once installation has finished, you can start managing and running local models on whatever hardware you want.

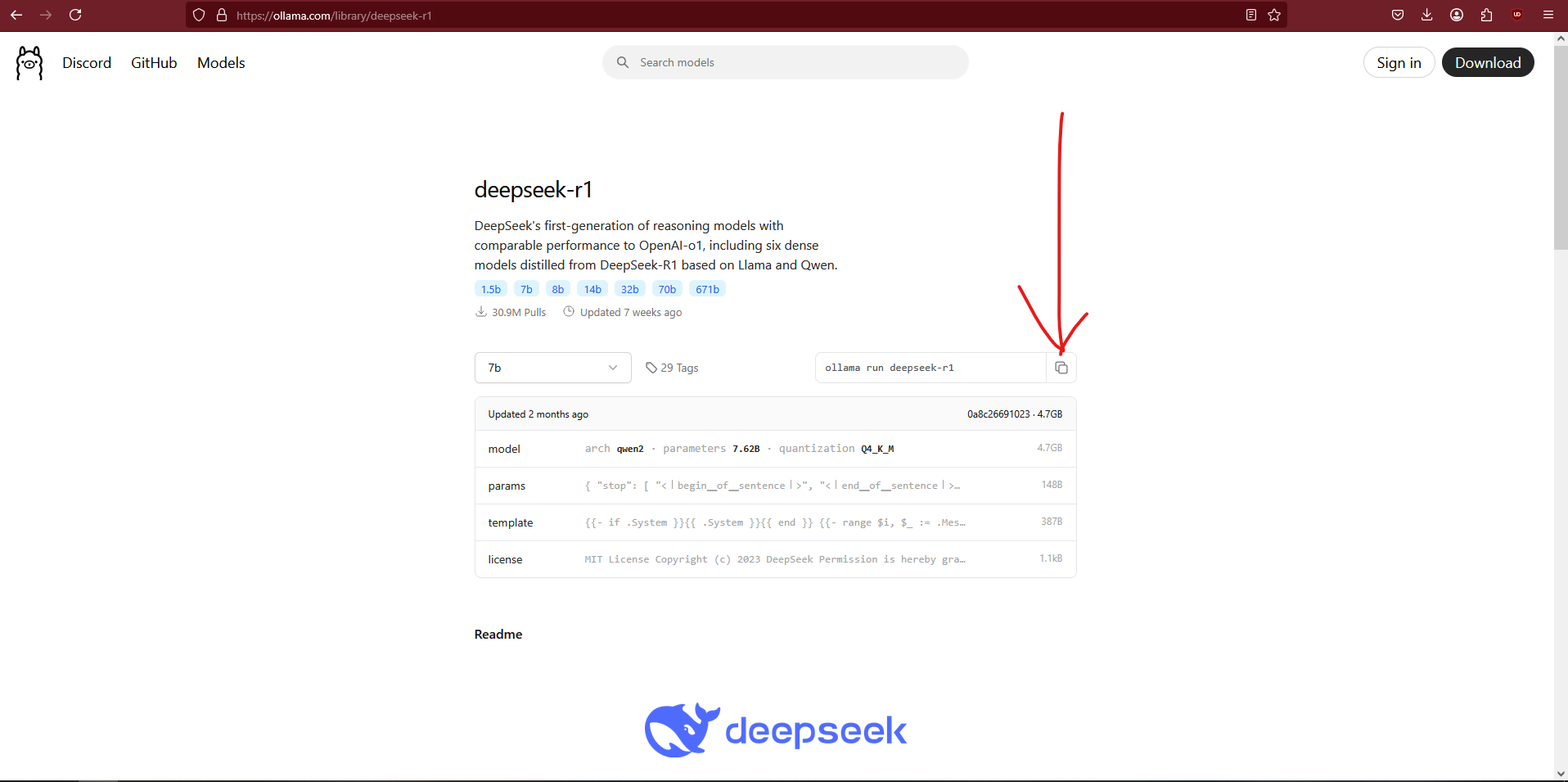

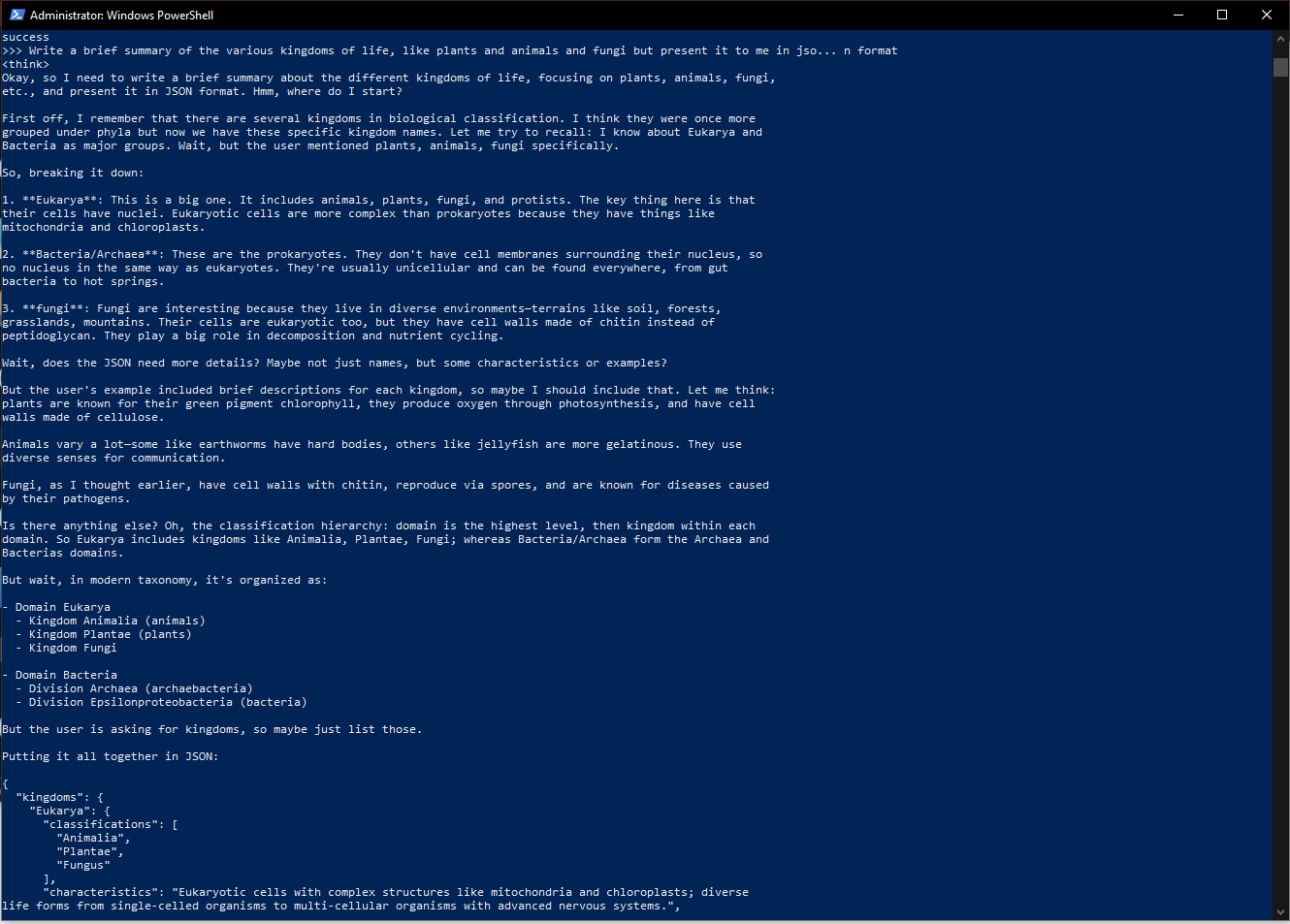

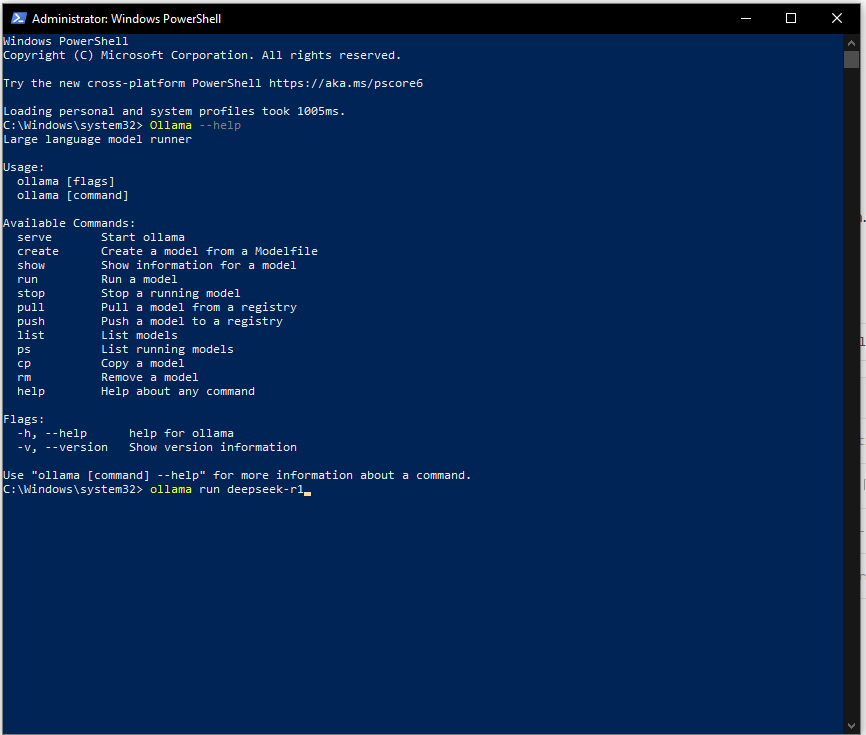

In the following example I've selected a DeepSeek model to run locally from the Ollama library:

Choosing the Right AI Model

Selecting an appropriate model depends on your specific use case. Consider factors like:

- Task Requirements: Text generation, summarization, code completion, etc.

- Resource Availability: Larger models require more computational power.

- Performance Benchmarks: Evaluate models based on accuracy and efficiency.
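To make the resource point concrete, here is a rough back-of-the-envelope sketch. It assumes memory use is dominated by the weights (parameter count times bits per weight) and ignores context cache and runtime overhead, so real usage will be higher. The function name and the example sizes are illustrative only.

```python
def estimate_weight_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Rough size of the model weights alone, in gigabytes (10^9 bytes).

    Assumption: every parameter is stored at the same precision.
    Context cache and runtime overhead are NOT included.
    """
    bytes_per_weight = bits_per_weight / 8
    return params_billions * bytes_per_weight


if __name__ == "__main__":
    # Hypothetical model sizes, compared at 16-bit and 4-bit precision.
    for name, params in [("8B model", 8), ("70B model", 70)]:
        for bits in (16, 4):
            size = estimate_weight_memory_gb(params, bits)
            print(f"{name} at {bits}-bit: about {size:.0f} GB of weights")
```

The takeaway is that quantized (lower-bit) variants of a model can fit on much smaller hardware than the full-precision version, usually at some cost in output quality.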

For up-to-date reviews and comparisons, refer to:

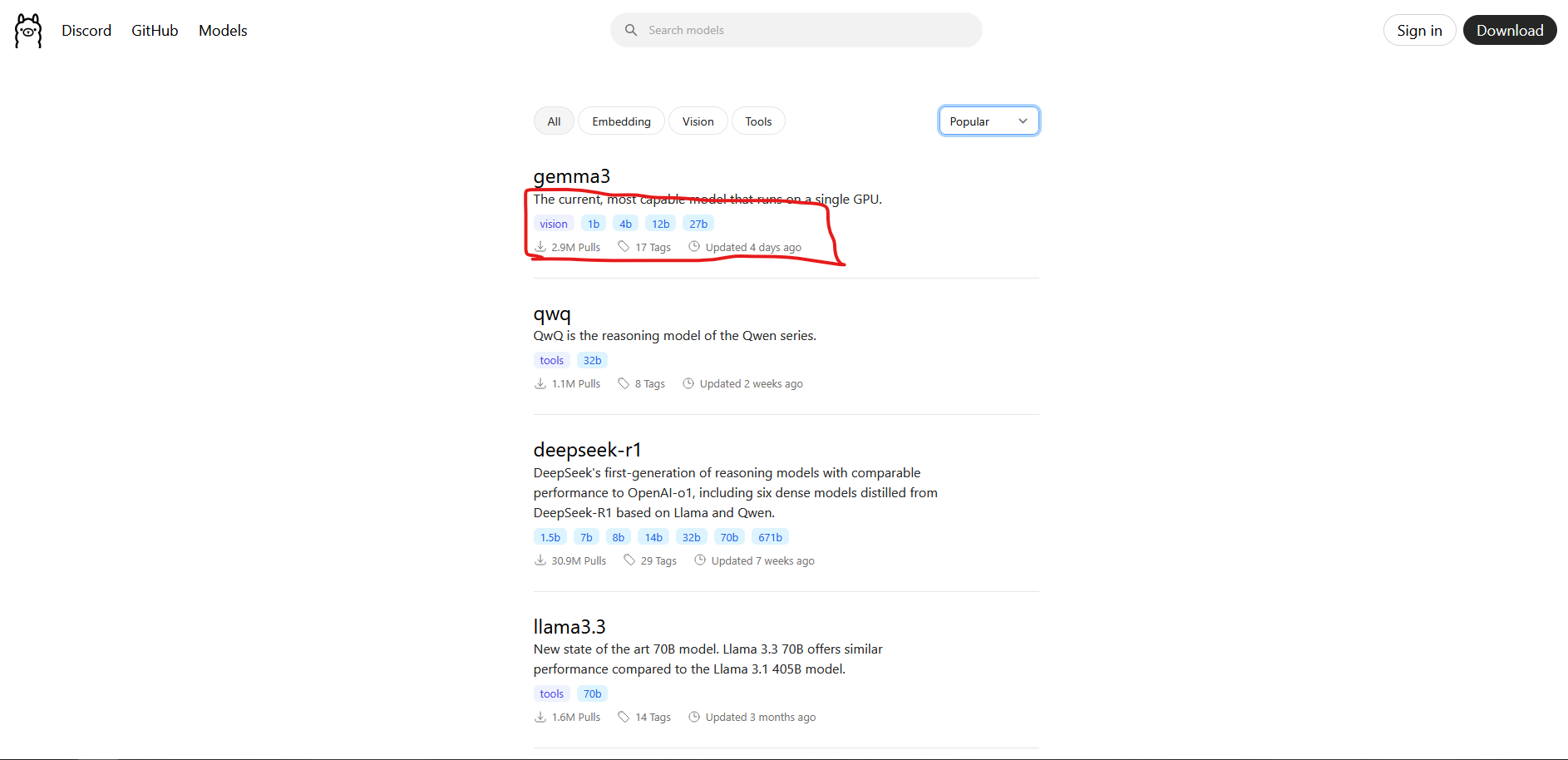

Above I've circled in red the key stats of the models which are used to compare them.

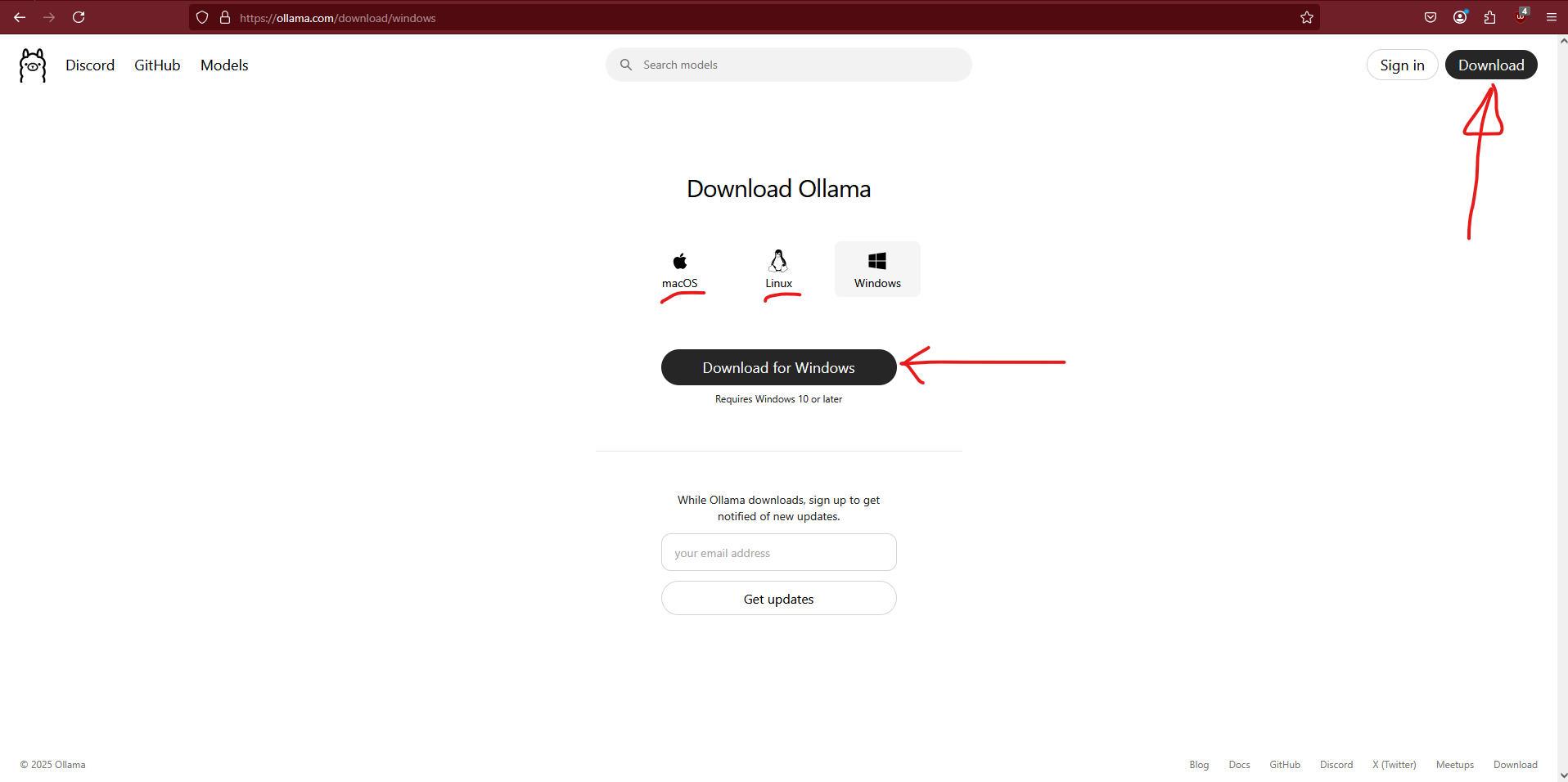

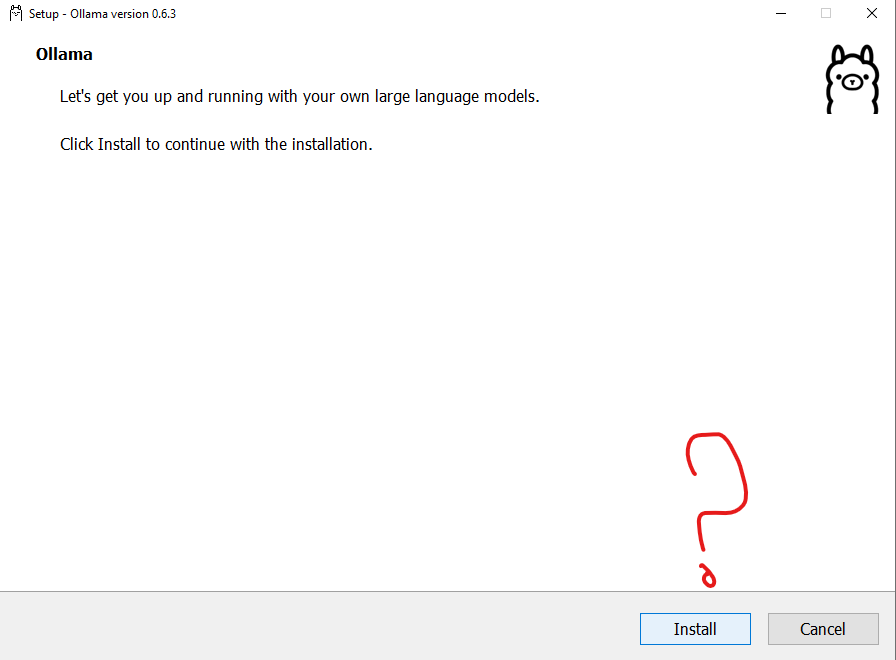

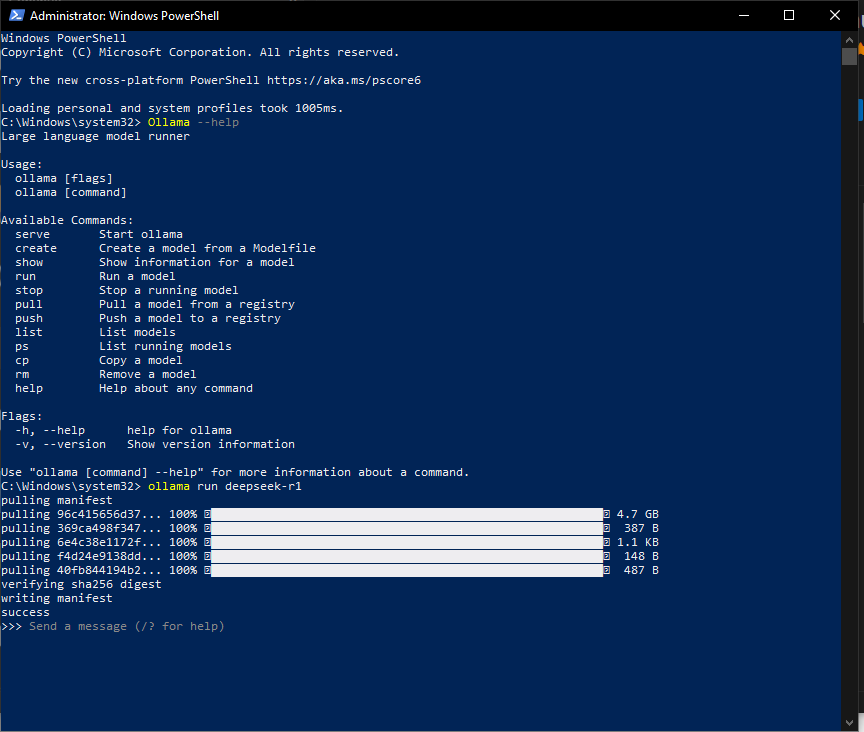

Installing Ollama via Command Line Interface (CLI)

To install Ollama on Linux (macOS and Windows use the installer downloaded from ollama.com):

- Download and run the install script:

curl -fsSL https://ollama.com/install.sh | sh

- Verify Installation:

ollama -v

- Run a Model:

ollama run llama3

To see which models are already installed, or to download a new one:

ollama list

ollama pull <model_name>

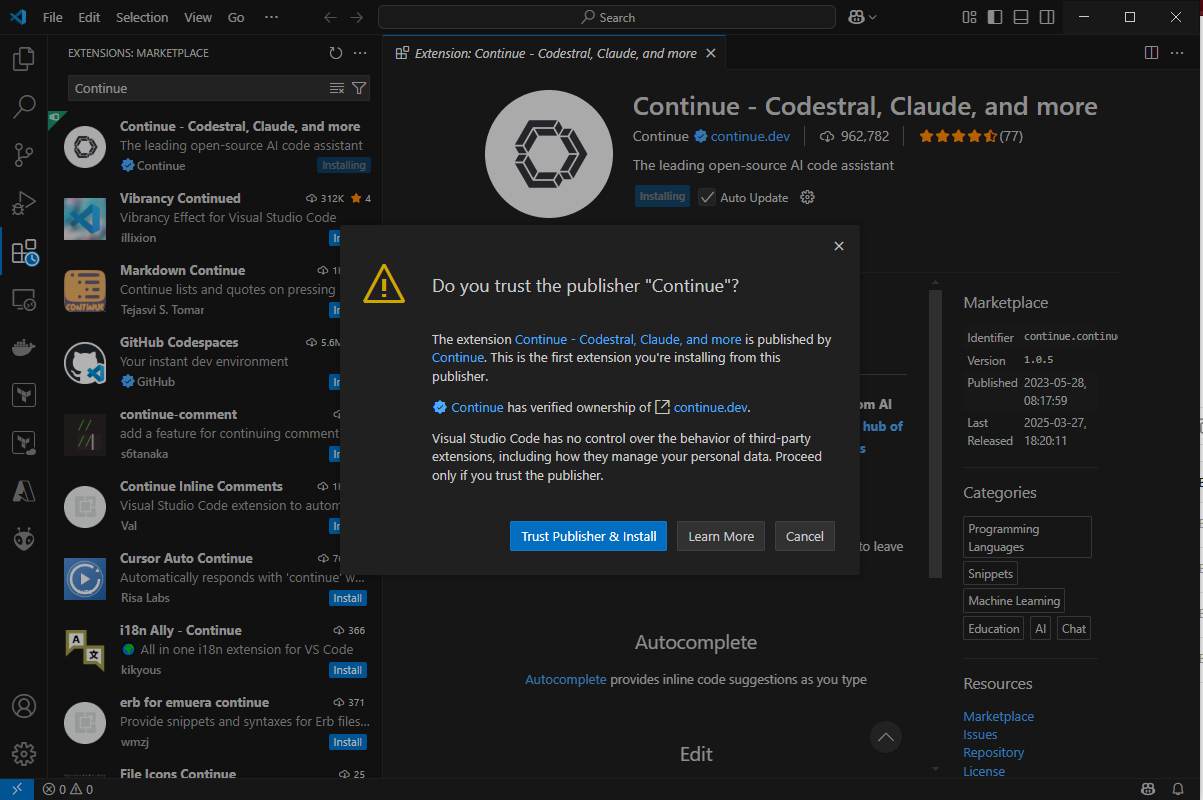

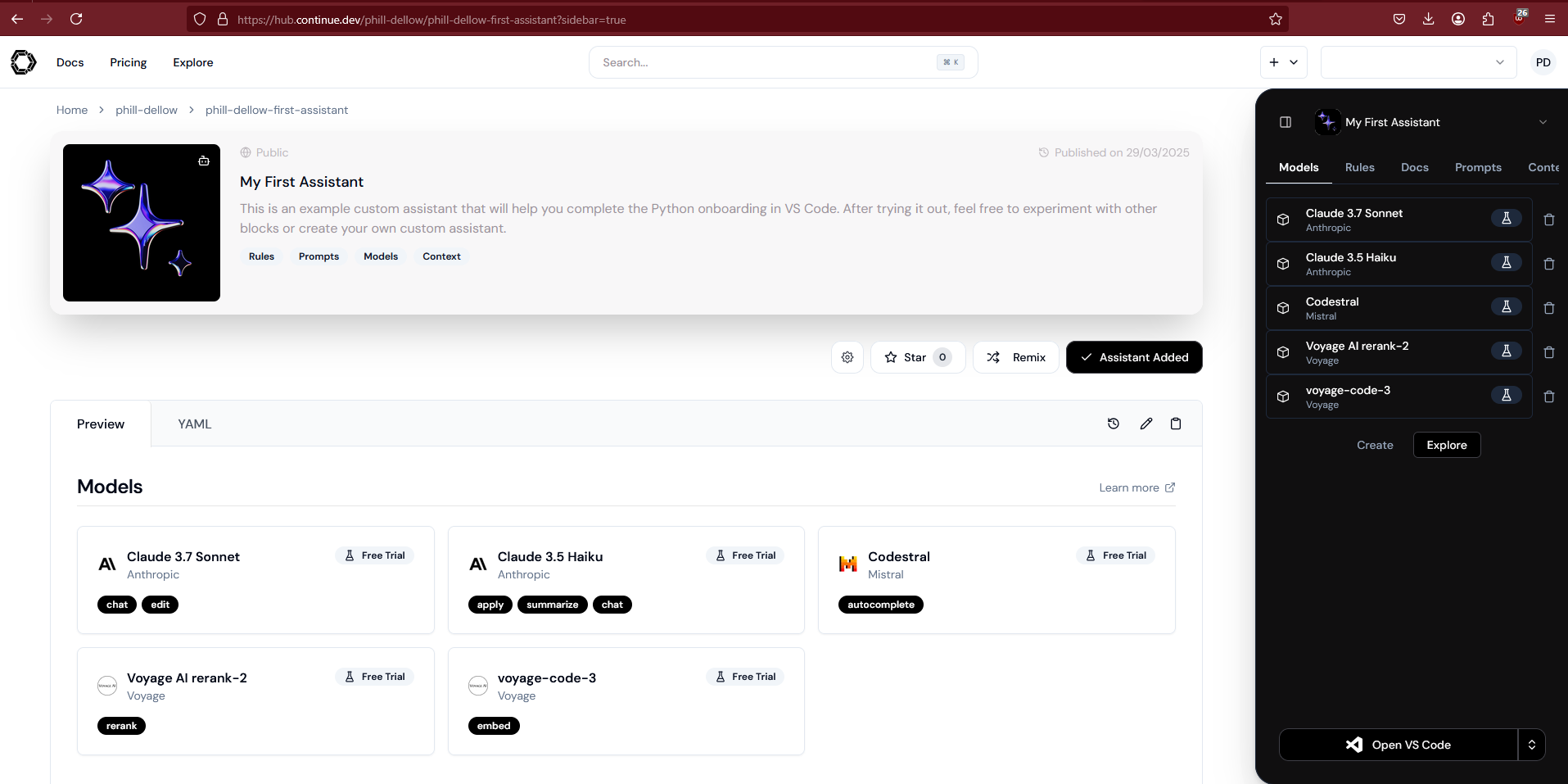
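Once a model is running, you can also talk to it from your own scripts. The sketch below assumes the Ollama server is listening on its default local address (port 11434) and that the llama3 model from the commands above has been pulled. The helper names build_request and generate are my own, not part of Ollama.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming generate request for the local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str) -> str:
    """Send the prompt and return the model's full reply as one string."""
    with urllib.request.urlopen(build_request(model, prompt)) as resp:
        return json.loads(resp.read())["response"]


if __name__ == "__main__":
    # Requires a running Ollama server and a pulled model.
    print(generate("llama3", "Explain what a closure is in one sentence."))
```

Setting "stream" to False returns the whole answer in a single JSON object, which keeps the example short. For long answers you may prefer streaming.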

Integrating Ollama with VSCode's Continue Extension

Continue is an open-source autopilot for VSCode that enhances your coding experience by integrating AI capabilities directly into your editor.

Setup:

- Install the Continue Extension from the VSCode Marketplace.

- Configure it to use Ollama by adding this to your .continue/config.json:

    {
      "models": [
        {
          "title": "Llama 3 Local",
          "model": "llama3",
          "provider": "ollama"
        }
      ]
    }
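If you already have a config file with other models in it, you may prefer to merge the Ollama entry in rather than overwrite the file. Here is a minimal sketch of that idea. The helper add_ollama_model is hypothetical, and it assumes the config is a JSON object with a "models" list shaped like the one above.

```python
import json


def add_ollama_model(config: dict, title: str, model: str) -> dict:
    """Return a copy of the config with an Ollama model entry added once."""
    models = list(config.get("models", []))
    entry = {"title": title, "model": model, "provider": "ollama"}
    # Skip the append if this Ollama model is already configured.
    already_present = any(
        m.get("provider") == "ollama" and m.get("model") == model for m in models
    )
    if not already_present:
        models.append(entry)
    return {**config, "models": models}


if __name__ == "__main__":
    existing = {"models": []}  # stand-in for the parsed contents of config.json
    updated = add_ollama_model(existing, "Llama 3 Local", "llama3")
    print(json.dumps(updated, indent=2))
```

The function returns a new dictionary instead of editing the input, so you can inspect the result before writing anything back to disk.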

With this setup, you can:

- Highlight Code for explanations or suggestions.

- Generate Components.

- Chat about your project with contextual awareness.

Developing with AI in VSCode

Here’s how to make the most of AI in your development workflow:

- Plan your project structure.

- Generate code snippets or templates.

- Refactor code with AI suggestions.

- Document automatically.
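As one illustration of the "document automatically" idea, here is a sketch that asks a local model for a docstring through Ollama's chat endpoint. It assumes the server is running on its default port with llama3 pulled. The prompt wording and the helper names are my own choices, not something Continue or Ollama prescribes.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
CHAT_URL = "http://localhost:11434/api/chat"


def build_docstring_messages(source_code: str) -> list[dict]:
    """Build the chat messages that ask the model to document a function."""
    return [
        {"role": "system", "content": "You write concise Python docstrings."},
        {"role": "user", "content": f"Write a docstring for this function:\n\n{source_code}"},
    ]


def ask(model: str, messages: list[dict]) -> str:
    """Send the messages to the local model and return its reply text."""
    payload = json.dumps({"model": model, "messages": messages, "stream": False})
    req = urllib.request.Request(
        CHAT_URL,
        data=payload.encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["message"]["content"]


if __name__ == "__main__":
    snippet = "def area(w, h):\n    return w * h"
    # Requires a running Ollama server and a pulled model.
    print(ask("llama3", build_docstring_messages(snippet)))
```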

Remember to review and test everything. AI is great, but it’s not your boss… yet.

Training Your Own AI Models

For those interested in customizing AI models:

- Select a Base Model.

- Gather Training Data.

- Fine-Tune with tools like llama.cpp, QLoRA, or Axolotl.

- Deploy Locally.
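For the "gather training data" step, a common approach is to store examples as JSON Lines, with one JSON object per line. The sketch below shows the idea. The field names "instruction" and "output" and the file name train.jsonl are assumptions for illustration, so check the format your fine-tuning tool actually expects.

```python
import json


def to_jsonl(examples: list[dict]) -> str:
    """Serialize examples as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(ex, ensure_ascii=False) for ex in examples) + "\n"


if __name__ == "__main__":
    # Hypothetical training examples; replace with your own data.
    examples = [
        {"instruction": "Summarize: Ollama runs LLMs locally.", "output": "Ollama is a local LLM runner."},
        {"instruction": "Translate to French: Hello", "output": "Bonjour"},
    ]
    with open("train.jsonl", "w", encoding="utf-8") as f:
        f.write(to_jsonl(examples))
```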

Resources:

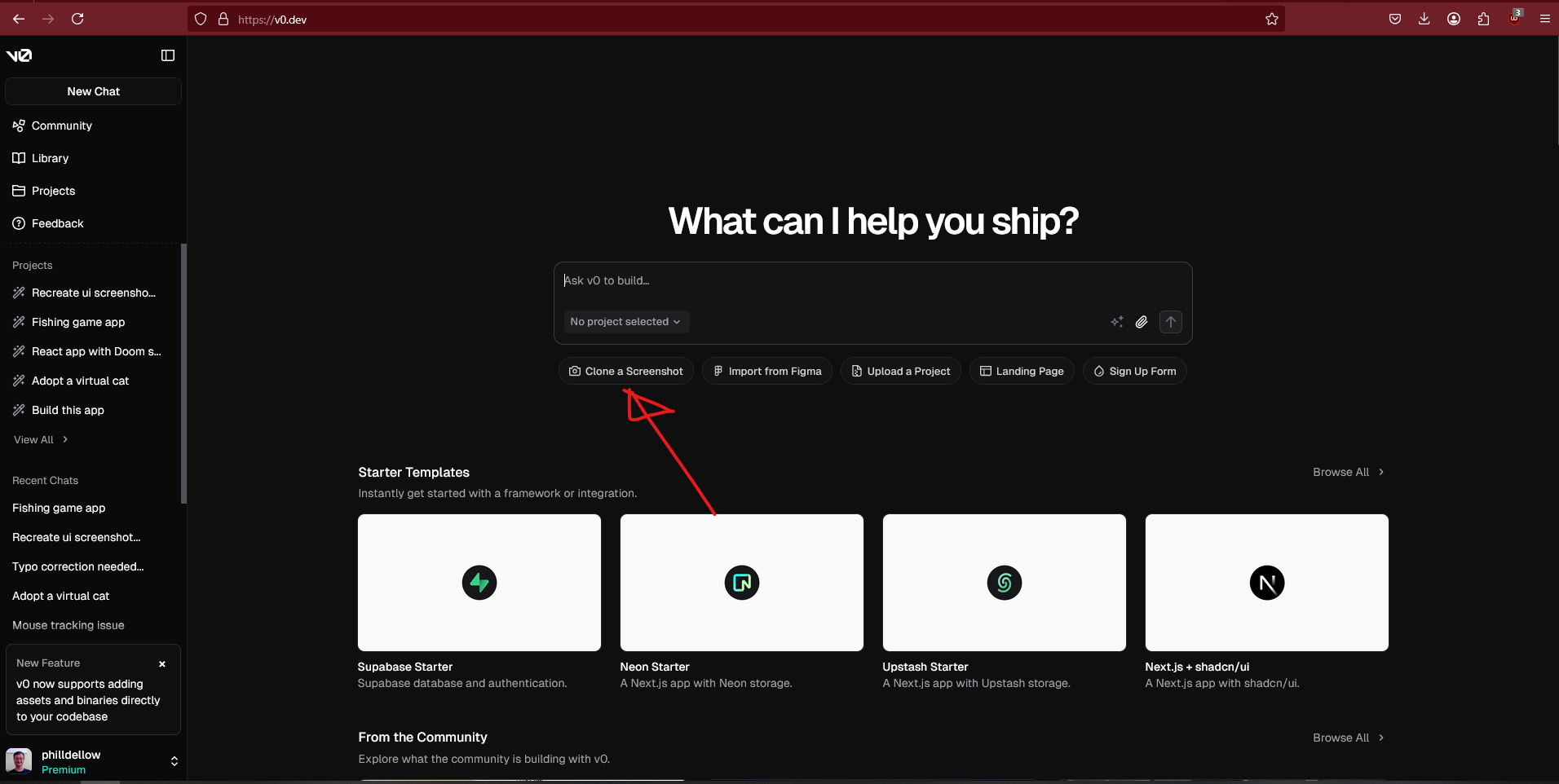

Introducing V0.dev

V0.dev is an AI-powered tool by Vercel that generates production-ready components and pages.

Key Features:

- AI-Powered UI Generation

- Seamless Integration with Vercel

- Tailwind CSS and shadcn/ui Support

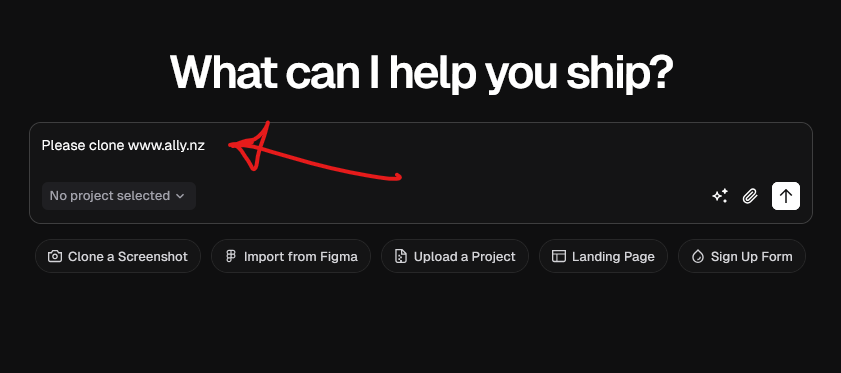

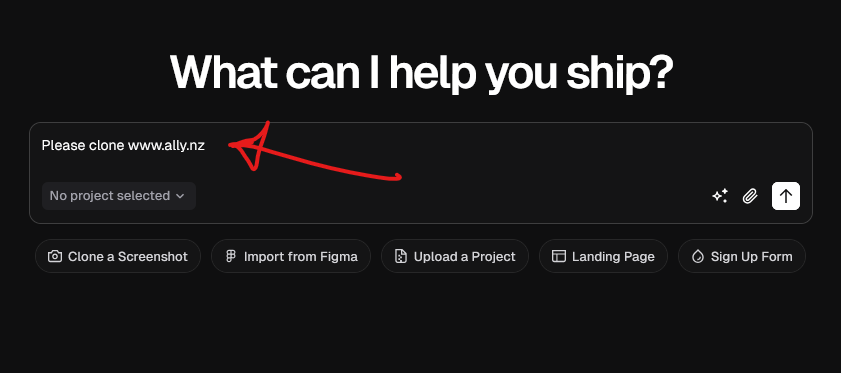

Cloning Ally.nz with V0.dev

Let’s use V0.dev to build your very own clone of ally.nz:

- Open V0.dev.

- Describe your task:

"Recreate the homepage of ally.nz using Next.js and Tailwind CSS."

- Generate & Preview.

- Export & Deploy.

Conclusion

Tools like Ollama, Continue in VSCode, and V0.dev are here to streamline your workflow. Whether you’re fine-tuning models or building frontends, they help you move faster, and running models locally with Ollama keeps you in control of your data and your code. And hey, if you can clone ally.nz with V0, you can probably clone your whole career.

Happy hacking!